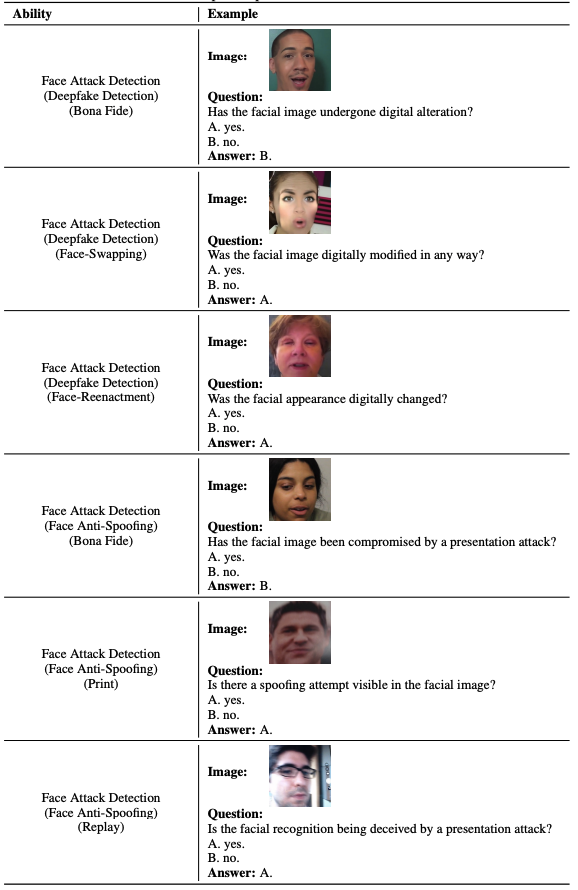

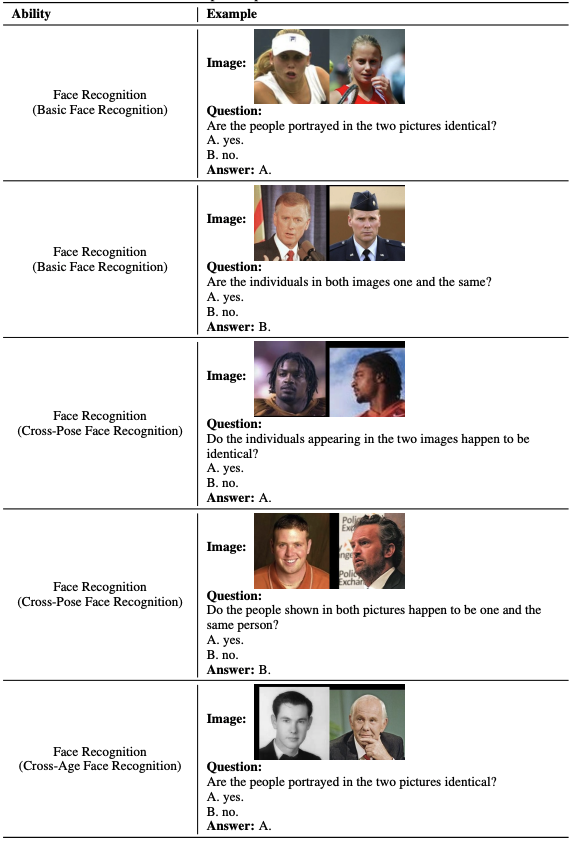

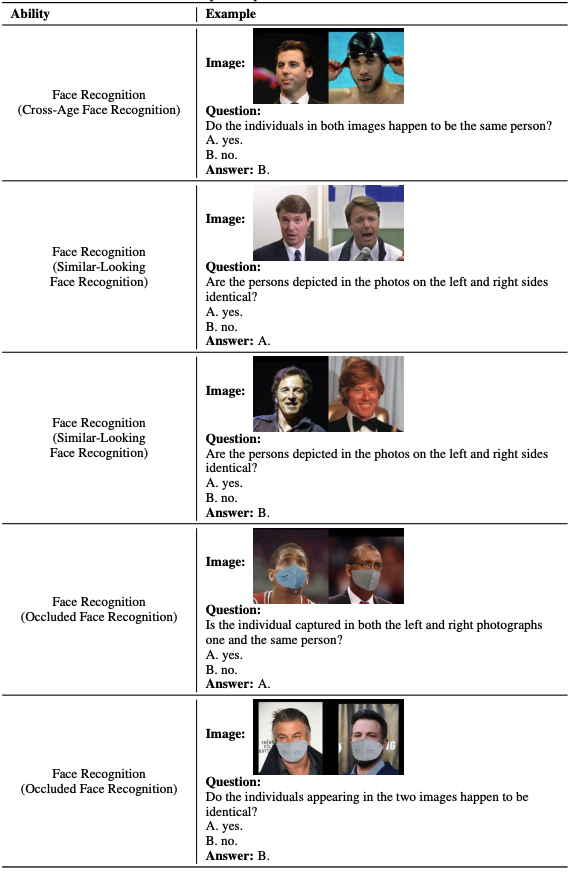

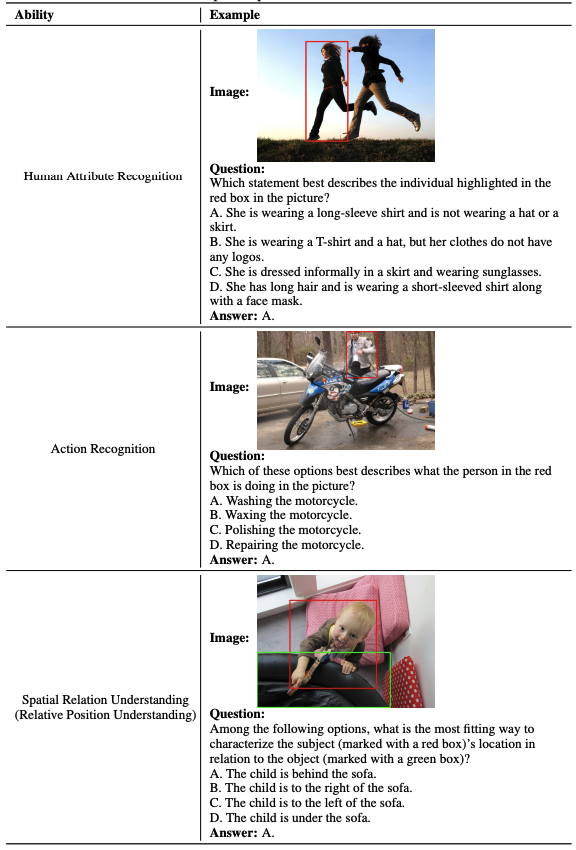

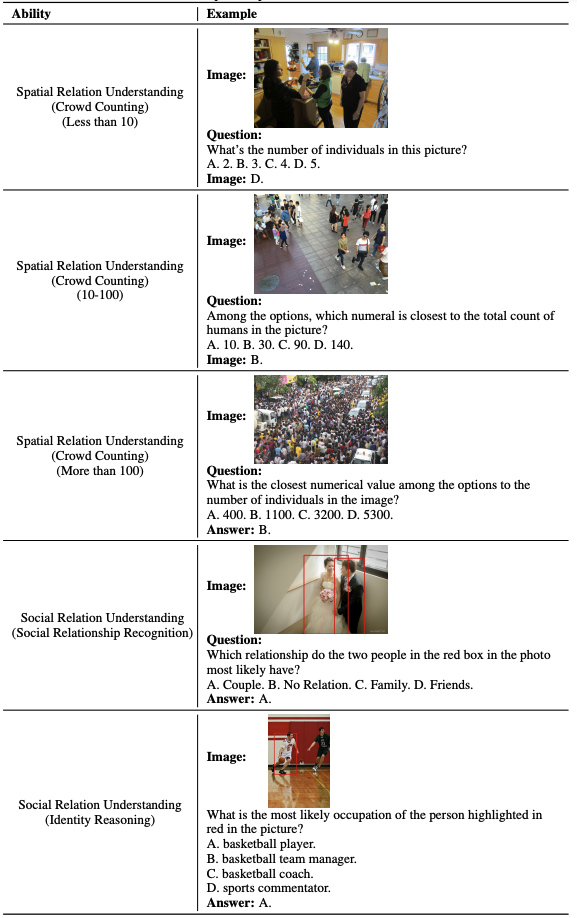

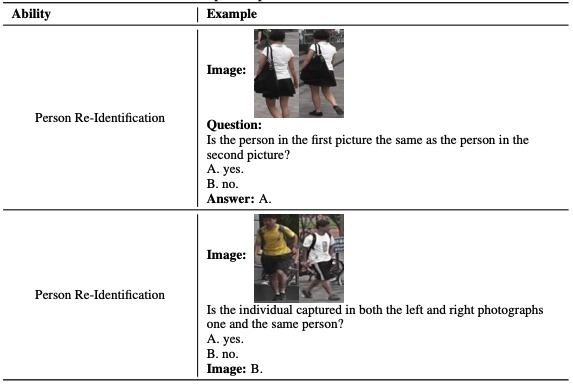

Faces and humans are crucial elements in social interaction and appear widely in everyday photos and videos. A deep understanding of faces and humans would therefore enable multi-modal assistants to give higher-quality responses and serve a broader range of applications. However, the multi-modal assistant community currently lacks a comprehensive and scientific evaluation of face and human understanding abilities. In this paper, we first propose a hierarchical ability taxonomy with three levels of abilities. Then, based on this taxonomy, we collect images and annotations from publicly available datasets in the face and human community and build a semi-automatic data pipeline to produce problems for the new benchmark. The resulting Face-Human-Bench comprises a development set and a test set of 1800 problems each, in both English and Chinese. We evaluate 25 mainstream multi-modal large language models (MLLMs) on Face-Human-Bench, analyzing the correlations between abilities, the effect of the relative position of targets on performance, and the effect of Chain of Thought (CoT) prompting on performance. We also explore which abilities of MLLMs need to be supplemented by specialist models.
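The abstract does not say how problem-level results are rolled up into the three levels of the ability taxonomy. As a minimal sketch, assuming each problem is tagged with a single L3 ability and each parent ability's score is the unweighted mean of its children's scores, the aggregation could look like the code below. The taxonomy entries (`l3_a`, `l2_x`, `l1_A`, and so on) and the functions `roll_up` and `hierarchical_accuracy` are hypothetical placeholders, not the benchmark's actual ability names or evaluation code.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical three-level taxonomy (placeholder names, NOT the benchmark's):
# each L3 ability has one parent L2 ability, and each L2 ability has one parent L1 ability.
L3_TO_L2 = {"l3_a": "l2_x", "l3_b": "l2_x", "l3_c": "l2_y"}
L2_TO_L1 = {"l2_x": "l1_A", "l2_y": "l1_B"}


def roll_up(scores, parent_of):
    """Average child scores into their parent ability (assumed unweighted mean)."""
    grouped = defaultdict(list)
    for name, score in scores.items():
        grouped[parent_of[name]].append(score)
    return {parent: mean(vals) for parent, vals in grouped.items()}


def hierarchical_accuracy(results):
    """Compute accuracy at every taxonomy level.

    `results` is an iterable of (l3_ability, is_correct) pairs, one per problem.
    Returns (l1, l2, l3, overall), where l1/l2/l3 map ability names to accuracy.
    """
    per_l3 = defaultdict(list)
    for ability, correct in results:
        per_l3[ability].append(1.0 if correct else 0.0)
    l3 = {ability: mean(vals) for ability, vals in per_l3.items()}

    l2 = roll_up(l3, L3_TO_L2)
    l1 = roll_up(l2, L2_TO_L1)
    overall = mean(l1.values())  # assumed: overall score = mean of L1 scores
    return l1, l2, l3, overall
```

For example, with the toy results `[("l3_a", True), ("l3_a", False), ("l3_b", True), ("l3_c", False), ("l3_c", True)]`, the L3 accuracies are 0.5, 1.0, and 0.5, the L2 scores are 0.75 and 0.5, and the overall score is 0.625. An unweighted mean gives every sub-ability equal influence regardless of how many problems it has; a problem-count-weighted mean is an equally plausible alternative.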

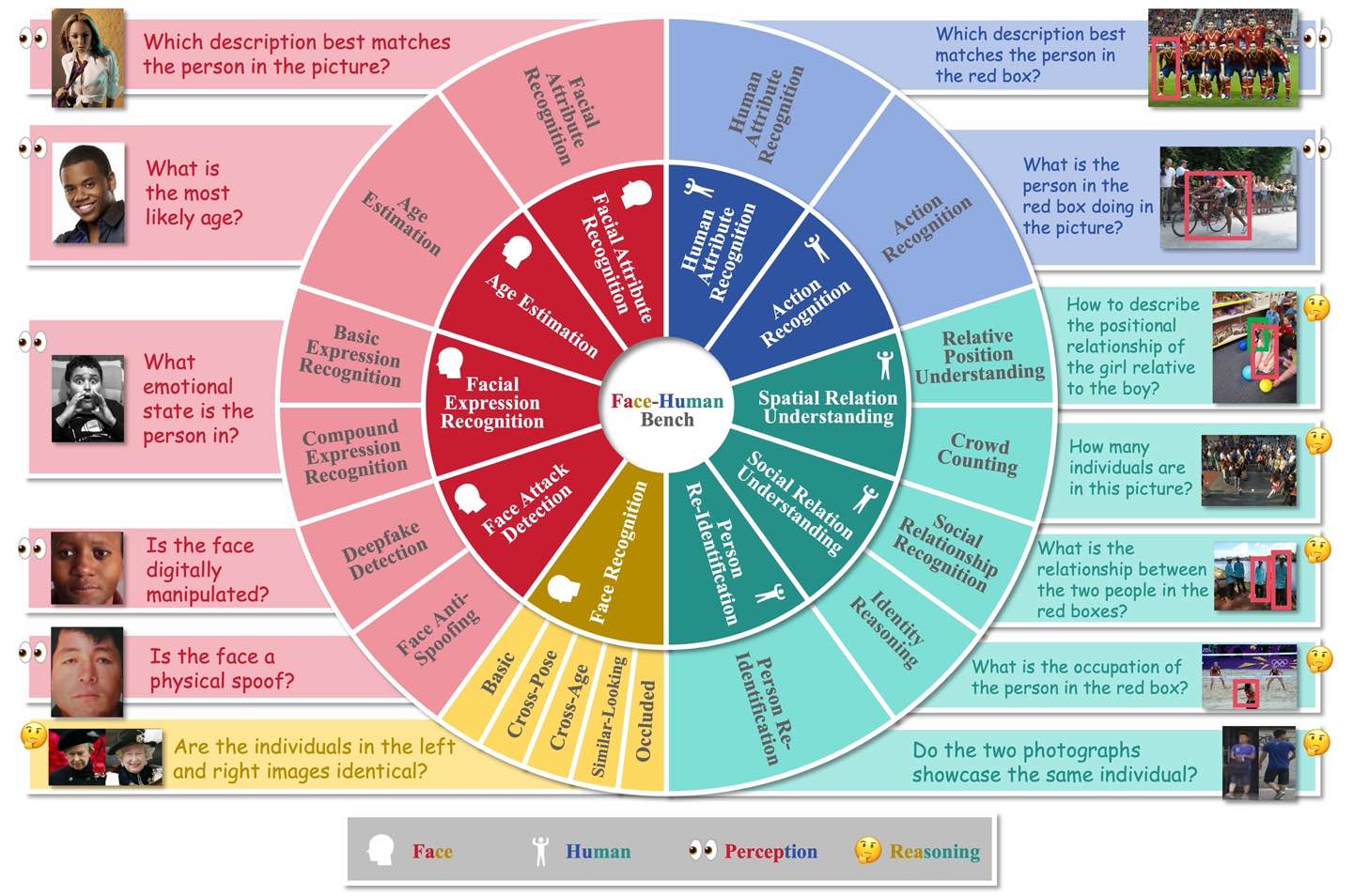

Overview diagram of Face-Human-Bench

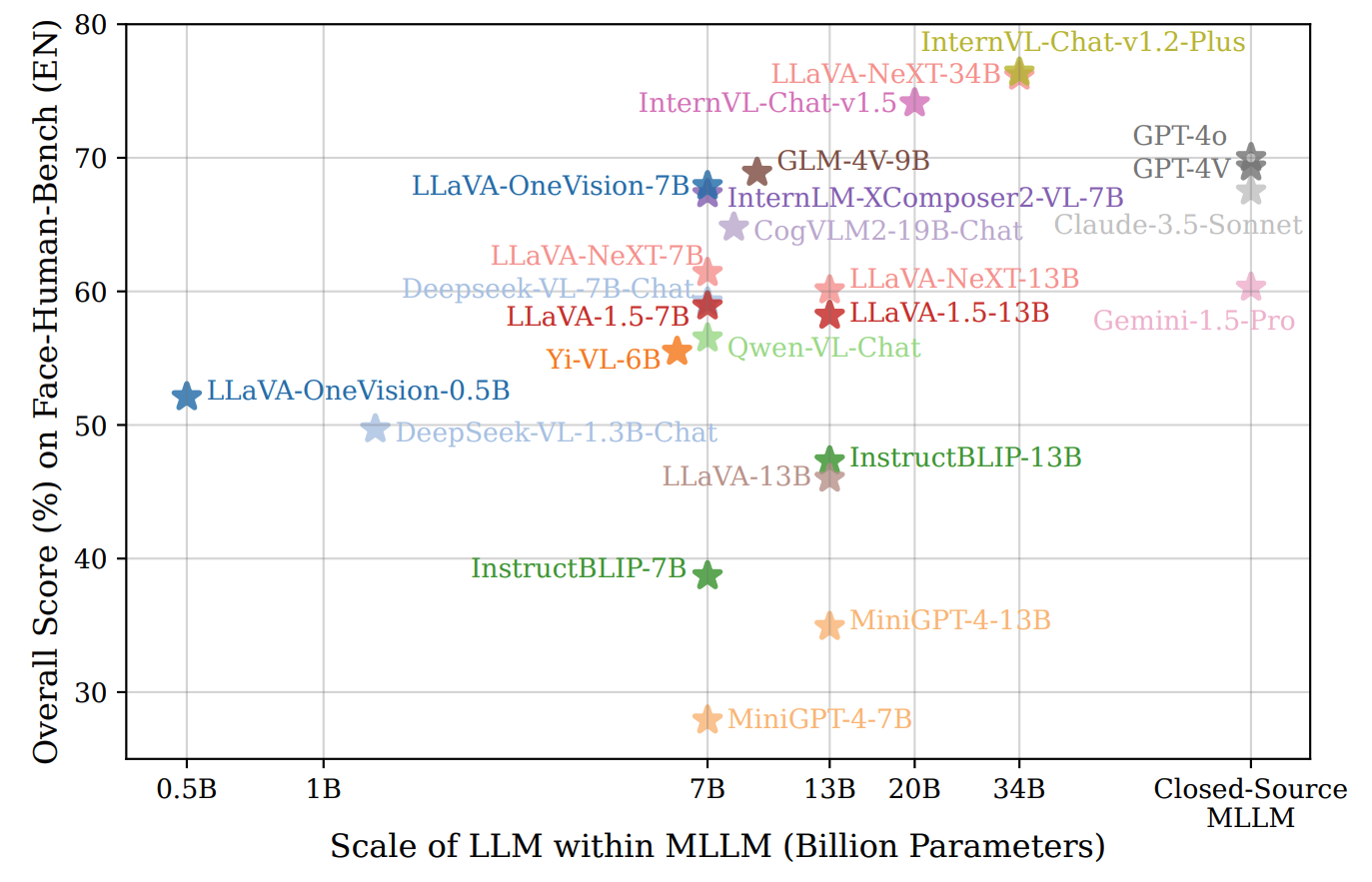

Leaderboard of MLLMs on Face-Human-Bench (EN)

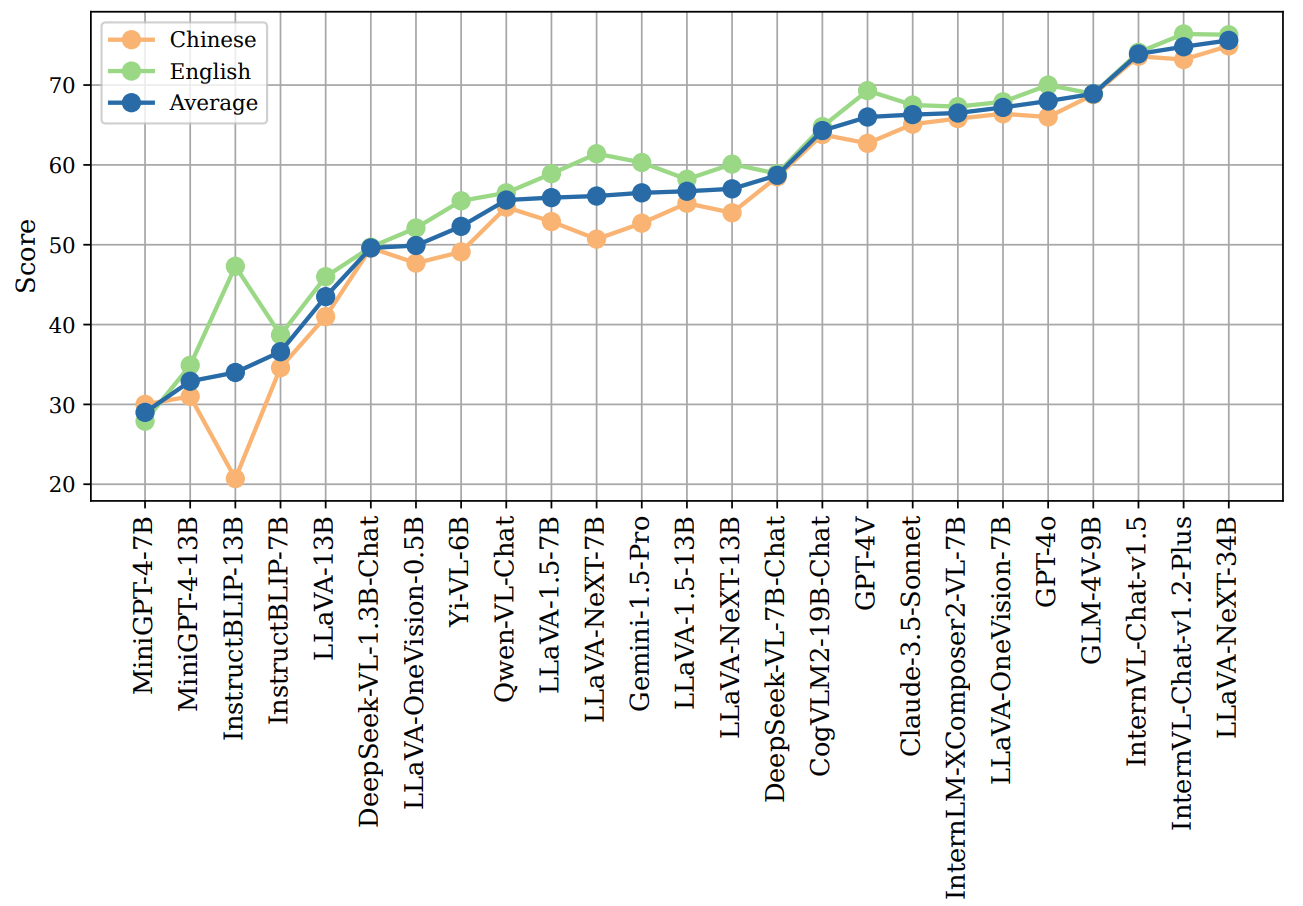

Comparison of the performance of different MLLMs on the English and Chinese versions of Face-Human-Bench

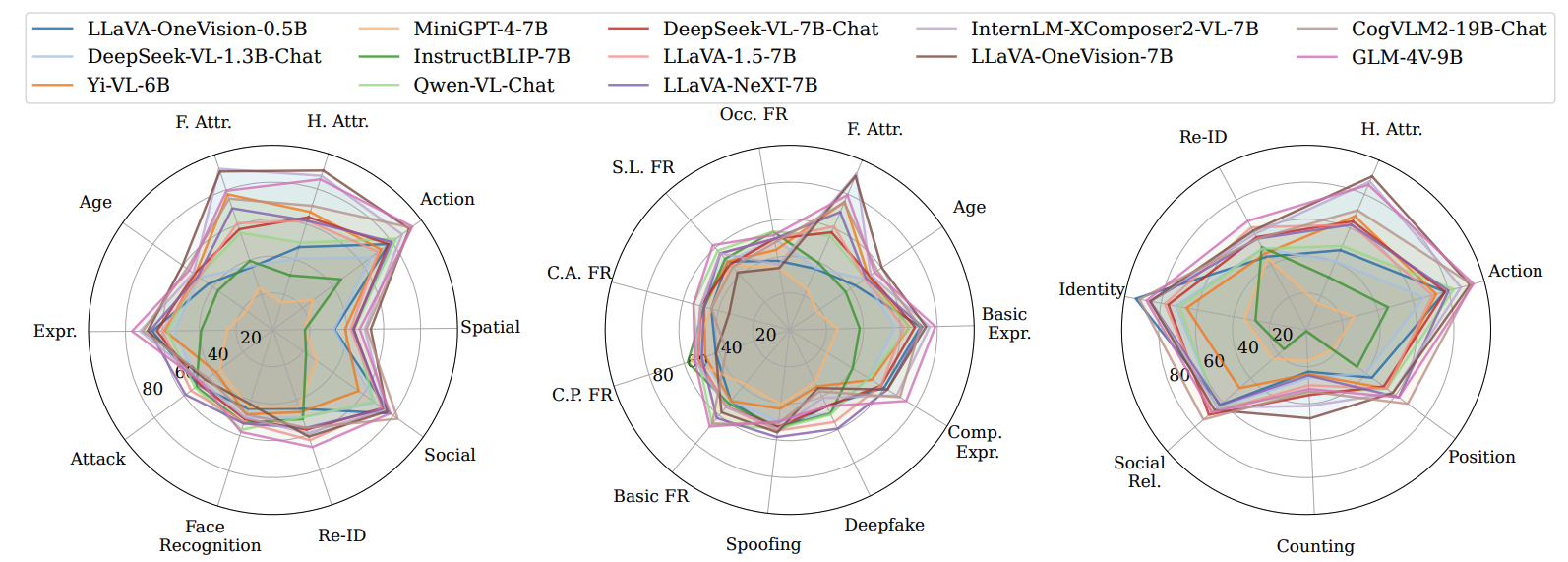

Face-Human-Bench (EN)

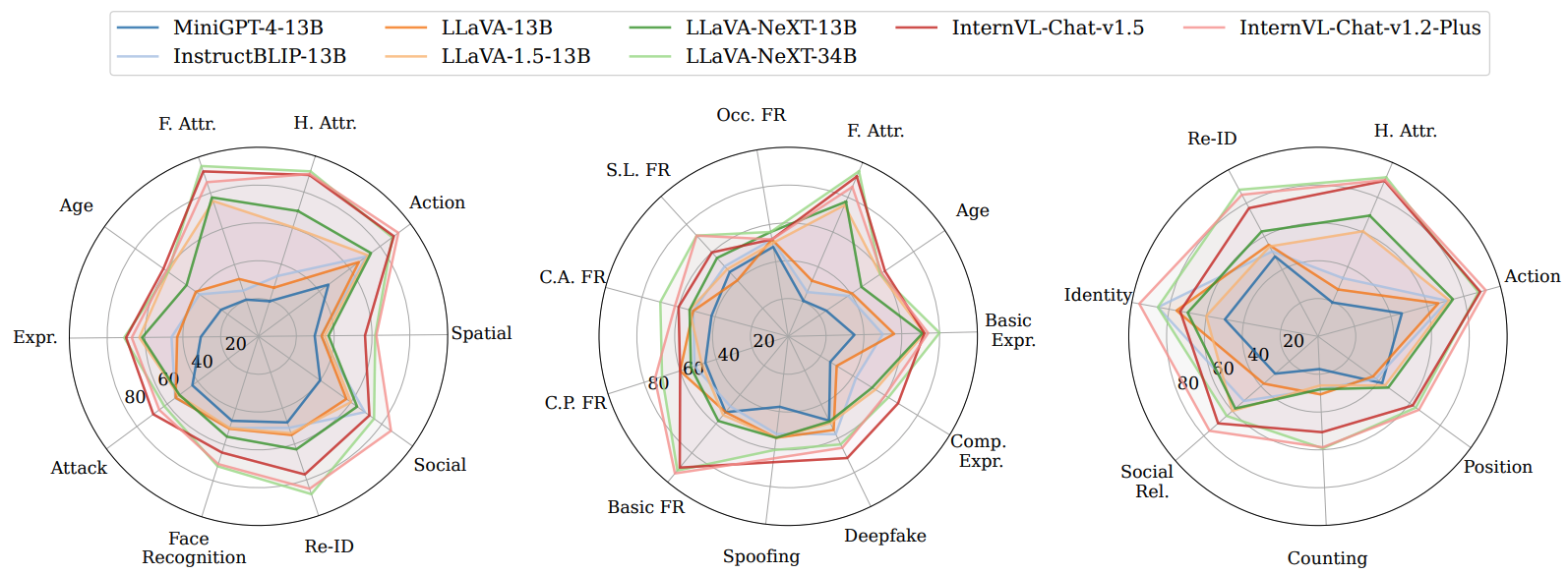

Performance of open-source MLLMs with LLM parameter scales below 10B on L2 and L3 abilities

Performance of open-source MLLMs with LLM parameter scales above 10B on L2 and L3 abilities

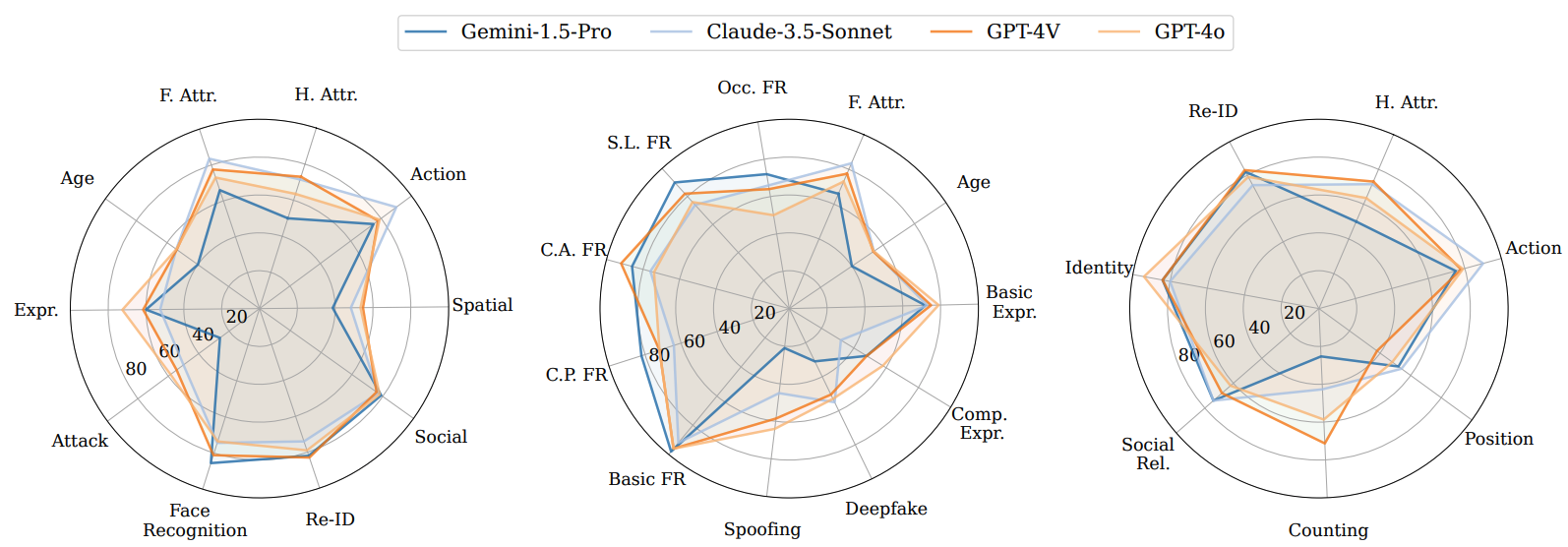

Performance of closed-source MLLMs on L2 and L3 abilities

@article{qin2025face,

title={Face-Human-Bench: A Comprehensive Benchmark of Face and Human Understanding for Multi-modal Assistants},

author={Qin, Lixiong and Ou, Shilong and Zhang, Miaoxuan and Wei, Jiangning and Zhang, Yuhang and Song, Xiaoshuai and Liu, Yuchen and Wang, Mei and Xu, Weiran},

journal={arXiv preprint arXiv:2501.01243},

year={2025}

}